Advanced Topics in AI Schedule

A Deep Dive into AI at Scale - Part 1

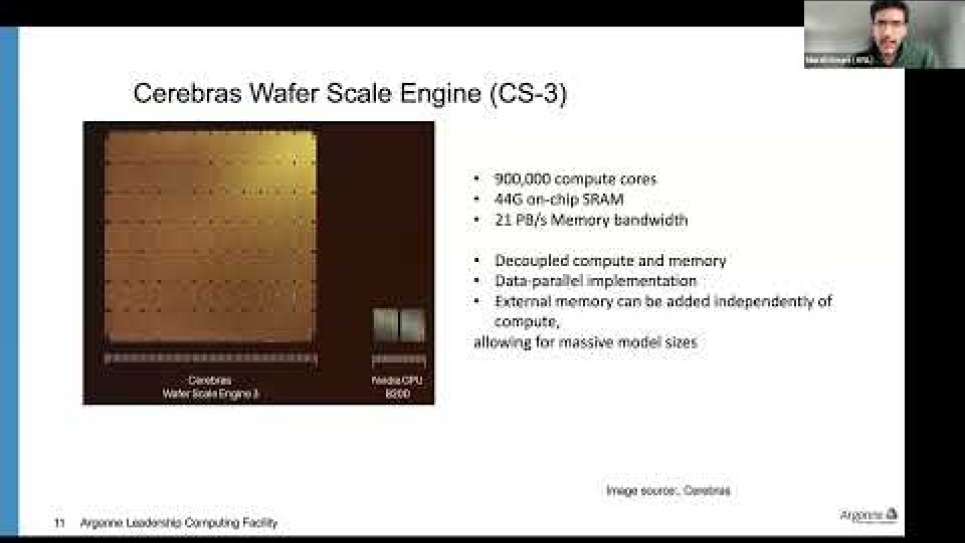

This session will cover the basics of distributed data parallel training of large models. Knowledge of these techniques is essential in order to deploy strategies that ensure efficient usage of resources for larger and larger datasets.

Science Talk: Deep Learning Applications on Scientific Visualization

Recent advances in deep learning have opened new directions for scientific visualization. In this talk, I will present our recent work that enables interactive flow visualization and 3D reconstruction using 3D Gaussian Splatting for scientific data, highlighting how deep learning techniques can enhance scalability, interactivity, and visual quality in scientific visualization.

A Deep Dive into AI at Scale - Part 2

Training large AI models in a reasonable time requires extensive computing resources. In this sesssion, we will discuss advanced strategies for distributed training of AI Models, including forms of data, model, and optimizer parallelisms such as TP, PP, ZeRO, and FSDP.

Science Talk: AI as a Tool in Computational Astrophysics: Progress and Lessons Learned

Artificial intelligence is increasingly used to tackle computational challenges in astrophysics (and beyond). This talk reviews the current state of the field of Astro-AI and presents recent examples that demonstrate how machine learning accelerates simulations and enables new discoveries. I will discuss my PhD work on stellar feedback emulation to illustrate both the promise of AI-driven approaches and the importance of framing AI as a refined tool to solve specific computational challenges.

Coupling Simulations with AI

Scientists often combine AI with simulations. In this session, we will cover why this coupling is helpful, how to do it, and performance considerations. We will demonstrate how to use two workflow tools for a scientific use case.

Science Talk: ChemGraph: An Agentic Framework for Computational Chemistry Workflows

Agentic AI systems powered by large language models are transforming the way scientists interact with computational tools. In this talk, I will introduce ChemGraph, an agentic AI framework for computational chemistry and materials science. ChemGraph automates atomistic simulations through natural language queries and intelligent task planning, enabling more efficient, accessible, and autonomous research workflows.

Inference Workflows

Benoit Côté and Aditya Tanikanti will present ALCF Inference service, an HPC-scale Inference-as-a-Service platform that enables dynamic deployment of large language models across GPU clusters. The talk will cover its architecture, scheduling framework, and real-world LLM use cases across scientific domains.

Thang Pham and Murat Keçeli will discuss LangGraph, a framework for building agentic AI systems, and provide a hands-on tutorial on constructing single-agent and multi-agent workflows. The session will demonstrate how these agents can be built, adapted, and applied across diverse scientific domains to automate research tasks.

Science Talk: Hybrid Pre-training of large models by leveraging Low-rank adapters

With increasing size of large models, the cost of training them is becoming prohibitively expensive. In this session I will highlight a recent work where we employed a fine-tuning technique in pre-training and reduced the parameter count of a Vision Transformer model to 10% of the original that saved 9 hours of training time while using 64 GPUs while maintaining the accuracy.